An Actuary learns Machine Learning - Part 10 - More label encoding / Gradient Boosted Regression15/2/2021

In which we correct our label encoding method from last time, try out a new algorithm - Gradient Boosted Regression - and finally managed to improve our score (by quite a lot it turns out) Source: https://somewan.design This is going to be our final post on the Kaggle House Price challenge, so with that in mind let's quickly recap what we've done in the previous 4 posts. This challenge was our first attempt at a regression problem as opposed to a classification problem, but to be honest the workflow has felt very similar. We defaulted to using a Random Forest algorithm as it's known to produce pretty good results out of the box, and we'd had experience of it before. Interestingly our best model was simply the version in which we fed all the features into the algorithm rather than trying to select which features to use. This has been a bit of a revelation over the last few weeks. If we are choosing the right algorithms, feature selection can easily be handled by the models themselves, and it appears they can do a better job than I can. I looked into whether we can expect an improvement in predictive value by removing outliers, by removing collinearity, or by normalising the data, but largely the info I read online pointed to Random Forests not really expecting much of a predictive performance increase from doing the above, and anytime you process the data there's a chance info is lost which will outweigh any benefits. There would be other ancillary benefits beside predictive increases - in run speed, interpretability, etc. but in terms of raw predictive power increase - not so much. So what's in store for the final post? Firstly, I want to try to correct an issue I think we introduced last time. When we carried our label encoding, we did not order the values properly and I think this could have removed some useful structure from the model. For example, in the training data the feature 'Basement Quality' originally had string including 'Good', 'Average', etc. which we used label encode to become the integers 1-5, but we didn't necessarily do this in the right order. So first order of business is to fix this and encode in a sensible order (Excellent = 1 , good =2, etc.) Second and final task for today is to try out a new algorithm. I thought about building a linear model of some description, but I decided to just stick with another tree based model this time. The model we are going to use is Gradient Boosted Regression from the Sklearn package. In turns out this model immediately gives us a performance boost, and we end up climbing quite a few places on the leader board. Mobile users - rotating to landscape view seems to improve how the code below is rendered.

In [1]:

import os

import numpy as np

import matplotlib.pyplot as plt

import pandas as pd

from sklearn.ensemble import RandomForestRegressor

from sklearn.model_selection import RandomizedSearchCV

from sklearn.model_selection import cross_val_score

from sklearn.preprocessing import LabelEncoder

from sklearn.ensemble import GradientBoostingRegressor

path = "C:\Work\Machine Learning experiments\Kaggle\House Price"

os.chdir(path)

In [2]:

train_data = pd.read_csv("train.csv")

test_data = pd.read_csv("test.csv")

In [3]:

# The below adjustment taken from the following:

# https://www.kaggle.com/juliencs/a-study-on-regression-applied-to-the-ames-dataset

frames = [train_data, test_data]

total_data = pd.concat(frames)

total_data = total_data.replace({"MSSubClass" : {20 : "SC20", 30 : "SC30", 40 : "SC40", 45 : "SC45",

50 : "SC50", 60 : "SC60", 70 : "SC70", 75 : "SC75",

80 : "SC80", 85 : "SC85", 90 : "SC90", 120 : "SC120",

150 : "SC150", 160 : "SC160", 180 : "SC180", 190 : "SC190"},

"MoSold" : {1 : "Jan", 2 : "Feb", 3 : "Mar", 4 : "Apr", 5 : "May", 6 : "Jun",

7 : "Jul", 8 : "Aug", 9 : "Sep", 10 : "Oct", 11 : "Nov", 12 : "Dec"}

})

total_data = total_data.replace({"Alley" : {"Grvl" : 1, "Pave" : 2},

"BsmtCond" : {"No" : 0, "Po" : 1, "Fa" : 2, "TA" : 3, "Gd" : 4, "Ex" : 5},

"BsmtExposure" : {"No" : 0, "Mn" : 1, "Av": 2, "Gd" : 3},

"BsmtFinType1" : {"No" : 0, "Unf" : 1, "LwQ": 2, "Rec" : 3, "BLQ" : 4,

"ALQ" : 5, "GLQ" : 6},

"BsmtFinType2" : {"No" : 0, "Unf" : 1, "LwQ": 2, "Rec" : 3, "BLQ" : 4,

"ALQ" : 5, "GLQ" : 6},

"BsmtQual" : {"No" : 0, "Po" : 1, "Fa" : 2, "TA": 3, "Gd" : 4, "Ex" : 5},

"ExterCond" : {"Po" : 1, "Fa" : 2, "TA": 3, "Gd": 4, "Ex" : 5},

"ExterQual" : {"Po" : 1, "Fa" : 2, "TA": 3, "Gd": 4, "Ex" : 5},

"FireplaceQu" : {"No" : 0, "Po" : 1, "Fa" : 2, "TA" : 3, "Gd" : 4, "Ex" : 5},

"Functional" : {"Sal" : 1, "Sev" : 2, "Maj2" : 3, "Maj1" : 4, "Mod": 5,

"Min2" : 6, "Min1" : 7, "Typ" : 8},

"GarageCond" : {"No" : 0, "Po" : 1, "Fa" : 2, "TA" : 3, "Gd" : 4, "Ex" : 5},

"GarageQual" : {"No" : 0, "Po" : 1, "Fa" : 2, "TA" : 3, "Gd" : 4, "Ex" : 5},

"HeatingQC" : {"Po" : 1, "Fa" : 2, "TA" : 3, "Gd" : 4, "Ex" : 5},

"KitchenQual" : {"Po" : 1, "Fa" : 2, "TA" : 3, "Gd" : 4, "Ex" : 5},

"LandSlope" : {"Sev" : 1, "Mod" : 2, "Gtl" : 3},

"LotShape" : {"IR3" : 1, "IR2" : 2, "IR1" : 3, "Reg" : 4},

"PavedDrive" : {"N" : 0, "P" : 1, "Y" : 2},

"PoolQC" : {"No" : 0, "Fa" : 1, "TA" : 2, "Gd" : 3, "Ex" : 4},

"Street" : {"Grvl" : 1, "Pave" : 2},

"Utilities" : {"ELO" : 1, "NoSeWa" : 2, "NoSewr" : 3, "AllPub" : 4}}

)

In [4]:

total_data['TotalSF'] = total_data['TotalBsmtSF'] + total_data['1stFlrSF'] + total_data['2ndFlrSF']

features = total_data.columns

features = features.drop('Id')

features = features.drop('SalePrice')

train_data = total_data[0:1460]

test_data = total_data[1460:2919]

In [5]:

pd.options.mode.chained_assignment = None # default='warn'

for i in features:

train_data[i] = train_data[i].fillna(0)

test_data[i] = test_data[i].fillna(0)

Y = train_data['SalePrice']

In [6]:

X = pd.get_dummies(train_data[features])

X_test = pd.get_dummies(test_data[features])

columns = X.columns

ColList = columns.tolist()

missing_cols = set( X_test.columns ) - set( X.columns )

missing_cols2 = set( X.columns ) - set( X_test.columns )

# Add a missing column in test set with default value equal to 0

for c in missing_cols:

X[c] = 0

for c in missing_cols2:

X_test[c] = 0

# Ensure the order of column in the test set is in the same order than in train set

X = X[X_test.columns]

In [7]:

RFmodel = RandomForestRegressor(random_state=1,n_estimators=1000)

RFmodel.fit(X,Y)

predictions = RFmodel.predict(X_test)

In [8]:

scores = cross_val_score(RFmodel, X, Y, cv=5)

scores

[scores.mean(), scores.std()]

Out[8]:

[0.8636834385297311, 0.029980225696624747]

In [9]:

output = pd.DataFrame({'ID': test_data.Id, 'SalePrice': predictions})

output.to_csv('my_submission - V10.csv',index=False)

In [10]:

# Hyperparameters taken from:

# https://www.kaggle.com/serigne/stacked-regressions-top-4-on-leaderboard/notebook

GBoost = GradientBoostingRegressor(n_estimators=3000, learning_rate=0.05,

max_depth=4, max_features='sqrt',

min_samples_leaf=15, min_samples_split=10,

loss='huber', random_state =5)

GBoost.fit(X, Y)

predictions = GBoost.predict(X_test)

In [11]:

scores = cross_val_score(GBoost, X, Y, cv=5)

scores

[scores.mean(), scores.std()]

Out[11]:

[0.8797876494941133, 0.03527391096919843]

In [18]:

output = pd.DataFrame({'ID': test_data.Id, 'SalePrice': predictions})

output.to_csv('my_submission - V10 - GBRessor.csv',index=False)

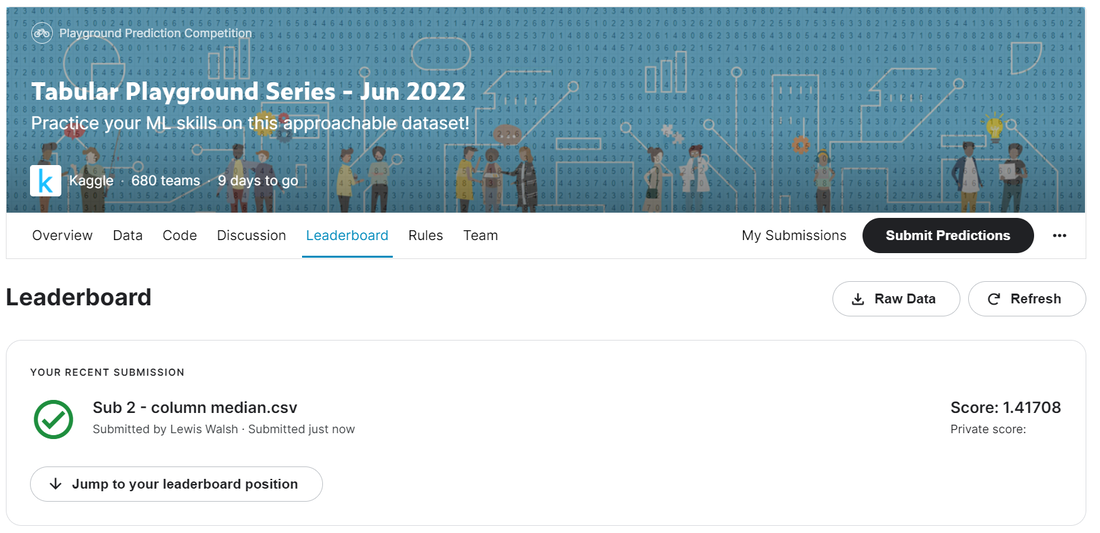

And all that remains is to submit our latest attempt: That's quite a jump! We've moved from about 2800 out of 4900 entries up to 2004 out of 4900, so quite a big jump up the leader board. It seems our gradient boosted regression instantly outperformed the Random Forests model, even without significant tweaking. That's all for today folks. There are a number of different directions I'm interested in taking this machine learning series next. So far we've tried basic classification and regression challenges, and both times focusing on Random Forests. I'm trying to decide between one of the following as my next line of attack:

You'll have to wait until next time to see what we do, as I haven't decided myself yet. |

AuthorI work as an actuary and underwriter at a global reinsurer in London. Categories

All

Archives

April 2024

|

RSS Feed

RSS Feed

Leave a Reply.